Clouds! Obstacles for crop monitoring from space

High-resolution optical satellites such as Sentinel-2 play an important role in remote sensing solutions for agriculture. Sentinel-2 covers the entire Earth’s surface at least every 5 days, delivering massive amounts of objective data on the state of our crops.

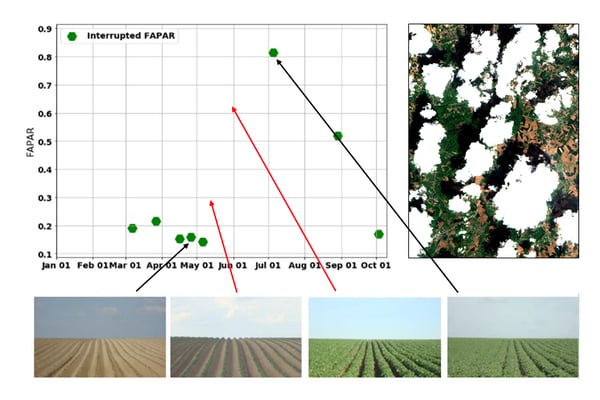

Yet despite the availability of all these optical data, operational agricultural monitoring systems still have their limitations. Optical sensors cannot see through clouds, which leaves cloud-induced gaps in the observations and makes it impossible to retrieve a complete time series of a vegetation index. To provide useful information on crop status, it’s important to monitor regularly, especially when conditions in the field change drastically (Fig. 1). The CropSAR technology provides a solution to this problem. Because it is independent of weather conditions, it delivers more observations during essential growth stages such as plant emergence.

Figure 1: Cloud-free fAPAR registrations (green dots) by Sentinel-2 over a potato field. The red arrows mark important growing season stages without valid retrievals. These gaps seriously limit operational crop monitoring and proper yield forecasting.
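
To make the size of such gaps concrete, here is a minimal sketch that measures the longest stretch between two cloud-free observations. It assumes a hypothetical 5-day revisit schedule and made-up cloud flags; the function name `longest_gap_days` and all values are illustrative and do not come from the data behind Fig. 1.

```python
from datetime import date, timedelta


def longest_gap_days(acquisitions):
    """Return the longest number of days between consecutive cloud-free observations.

    `acquisitions` is a list of (date, is_cloudy) tuples sorted by date.
    Returns None when fewer than two cloud-free observations exist.
    """
    clear_dates = [d for d, is_cloudy in acquisitions if not is_cloudy]
    if len(clear_dates) < 2:
        return None  # not enough valid observations to define a gap
    return max((b - a).days for a, b in zip(clear_dates, clear_dates[1:]))


# Hypothetical schedule: one acquisition every 5 days, cloud flags invented.
start = date(2019, 4, 1)
cloud_flags = [False, True, True, True, False, False, True, True, True, True, False]
acquisitions = [
    (start + timedelta(days=5 * i), cloudy) for i, cloudy in enumerate(cloud_flags)
]

print(longest_gap_days(acquisitions))
```

With these invented flags, a nominal 5-day revisit still leaves the field unobserved for several weeks, which is the kind of gap the red arrows in Fig. 1 point at.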

Thinking outside the box

CropSAR relies on observations made by Sentinel-1, a constellation of two radar satellites. While optical remote sensing resembles, to some extent, what we see with our own eyes, the radar perspective is much harder to interpret. A radar does not depend on reflected sunlight; instead, it sends out its own energy pulse and measures the return signal (‘backscatter’) after interaction with the surface. For vegetation, radar backscatter does not indicate biophysical processes in the plant but rather carries information on the structure and moisture content of the vegetation and the underlying soil.

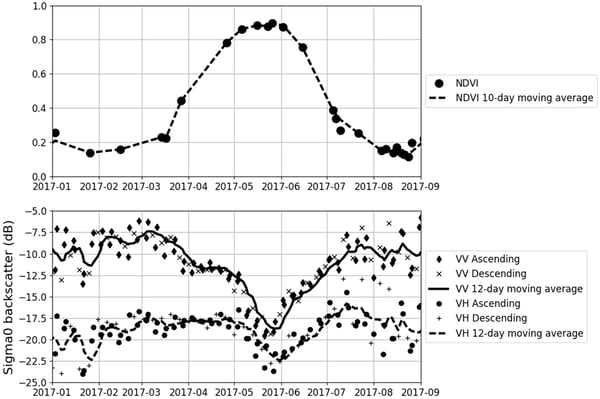

Even though optical and radar sensors see completely different things, their measurements are correlated, because both hold information on the vegetation status (Fig. 2). It’s exactly this correlation that CropSAR exploits to fill in the cloud-induced gaps in our optical measurements.

Figure 2: Sentinel-2 NDVI observations over a winter wheat field and the corresponding Sentinel-1 backscatter signature. While the two measurements are completely different, the correlation between optical and radar is clearly visible, especially during the growing season (source). Note that when vegetation cover on the parcel is limited, the radar backscatter is much noisier, as the signal then mostly reflects the moisture content of the underlying surface.
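
As a rough illustration of what "correlated" means here, the sketch below computes NDVI from red and near-infrared reflectance using the standard formula (NIR - Red) / (NIR + Red), then the Pearson correlation with a backscatter series. All reflectance and backscatter values are invented for the example and are not taken from Fig. 2; the helper names `ndvi` and `pearson` are likewise just illustrative.

```python
import math


def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Invented values for seven coinciding dates across a growing season.
red = [0.12, 0.10, 0.07, 0.05, 0.04, 0.05, 0.09]
nir = [0.20, 0.25, 0.35, 0.45, 0.50, 0.45, 0.25]
backscatter_db = [-19.0, -18.0, -16.5, -15.0, -14.5, -15.5, -18.5]

ndvi_series = [ndvi(n, r) for n, r in zip(nir, red)]
r_value = pearson(ndvi_series, backscatter_db)
print(f"Pearson r between NDVI and backscatter: {r_value:.2f}")
```

A single correlation coefficient like this only captures a linear relationship over one field and one season. Real parcels behave in far more varied ways.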

Combining the best of two worlds

The correlation between Sentinel-1 and Sentinel-2 observations is too complex and case-specific to be captured by a simple regression model. On the other hand, we do have massive and ever-growing amounts of coinciding Sentinel-1 and Sentinel-2 observations. Combining these data with deep learning technology makes it possible to exploit these complex relationships and merge both datasets.

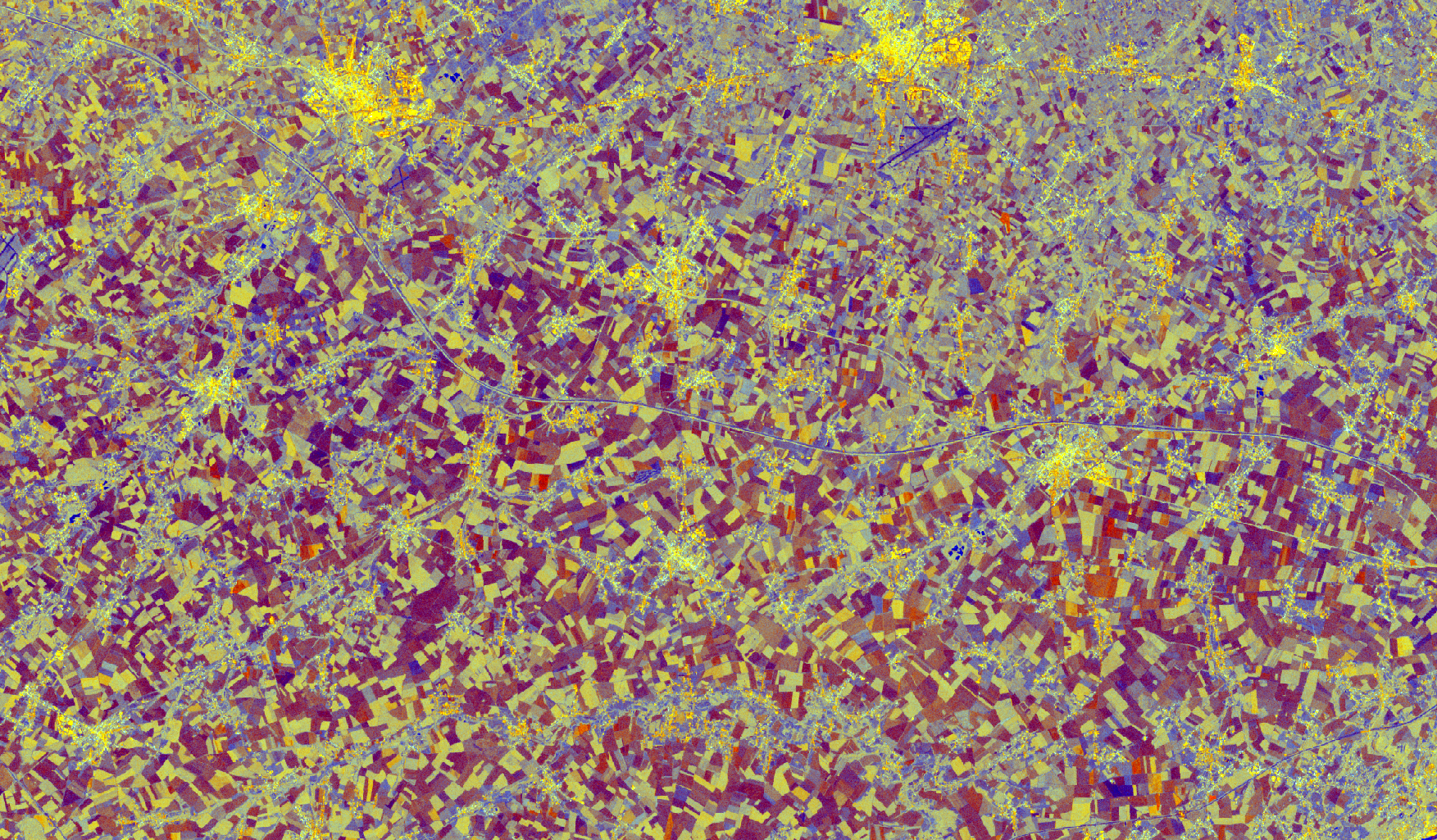

Figure 3: An animation showing a Sentinel-1 multi-temporal speckle-filtered image of April (left) and a Sentinel-2 image of 11 April 2019 (right) in which clouds obscure the view. - © contains modified Copernicus Sentinel data 2019, produced by VITO Remote Sensing

Using a powerful GPU cluster and state-of-the-art neural network technologies, we were able to train an algorithm that can identify the temporal correlation between Sentinel-1 and Sentinel-2 and hence fill gaps in Sentinel-2 using information from Sentinel-1.

CropSAR takes as input cloud-interrupted time series of Sentinel-2 fAPAR and adds uninterrupted time series of Sentinel-1 backscatter. The algorithm then returns a completely cloud-free time series of fAPAR together with its confidence level (Fig. 4). This setup enables us to combine the best of both worlds: the insight into what happens in a plant that an optical image gives, and the cloud-penetrating capacity of a radar. In doing so, we have successfully identified key agricultural events such as plant emergence, canopy closure, and harvest even in cloudy periods, which would otherwise be impossible.

Figure 4: Animation showing how CropSAR works over a potato field. Thanks to the frequent, cloud-independent acquisitions of Sentinel-1, CropSAR is able to provide daily estimates of the current fAPAR over the parcel, while building on every cloud-free Sentinel-2 observation as it becomes available (green squares). The algorithm is therefore self-correcting, which explains why uncertainties can sometimes grow quite large until a new Sentinel-2 observation arrives and reduces them sharply. This is especially true in times of low or dry vegetation cover, when Sentinel-1 mostly reflects soil conditions and the confidence of the predicted fAPAR is lower. Here, however, CropSAR successfully bridges the long absence of valid Sentinel-2 data during crop emergence, thanks to the information contained in the Sentinel-1 signal.
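
The input and output of this gap-filling step can be illustrated with a deliberately simplified stand-in. The sketch below is not the CropSAR neural network: it swaps it for a straight-line fit between backscatter and fAPAR on the cloud-free dates, which is exactly the kind of simple model that is too limited in practice. It also does not model the confidence level. All names (`fit_line`, `fill_gaps`) and values are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fill_gaps(fapar, backscatter_db):
    """Fill None entries in `fapar` using a line fitted on the cloud-free dates."""
    pairs = [(s, f) for s, f in zip(backscatter_db, fapar) if f is not None]
    slope, intercept = fit_line([s for s, _ in pairs], [f for _, f in pairs])
    filled = []
    for s, f in zip(backscatter_db, fapar):
        if f is not None:
            filled.append(f)  # keep the optical observation where it exists
        else:
            estimate = slope * s + intercept
            filled.append(min(1.0, max(0.0, estimate)))  # fAPAR is a fraction in [0, 1]
    return filled


# Invented example: None marks dates where clouds hid the field from Sentinel-2.
backscatter_db = [-19.0, -18.0, -16.5, -15.0, -14.5, -15.5, -18.5]
fapar = [0.10, None, None, 0.70, 0.80, None, 0.15]

filled = fill_gaps(fapar, backscatter_db)
print([round(v, 2) for v in filled])
```

The structure mirrors the description above: an interrupted optical series and an uninterrupted radar series go in, and a gap-free optical series comes out. Only the model in the middle is simplified.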

Looking ahead

Today, the CropSAR technology is ready to use at field level and fully implemented in our WatchITgrow platform. This means that the method returns an uninterrupted time series for each individual parcel, integrated over the entire field. But we want to go further!

Our next step is to expand the technology to pixel level, filling cloud-induced gaps in optical images with the aid of radar observations. This will allow us to also look at within-field differences in crop status, a prerequisite for precision farming. We believe that this technology will be a clear added value for operational crop monitoring in tropical and temperate regions, where most agriculture takes place. Stay tuned for more!
