Detecting marine plastic litter! Where it all started

The use of remote sensing technologies to detect and characterize marine plastic litter is still fairly new. Although the technology is already used in industrial sorting, applying it in an outdoor marine environment is much more complex. Several years ago we started with the first experiments in a lab environment to better characterize the spectral reflectance of marine plastics. These gave us insight into the effects of water, weathering and the structure of the plastics. This was an essential first step towards developing algorithms for plastic detection and selecting the most appropriate sensors for use outdoors.

We’ve been using this knowledge to process images from both drones and fixed cameras in multiple marine plastic detection projects, e.g. AIDMAP (ESA - The Discovery Campaign on Remote Sensing of Plastic Marine Litter) and PLUXIN. In these research projects we focus on detecting plastic accumulation areas and individual pieces of plastic litter in rivers. Both can provide important information for understanding the pathway of marine litter from source to sink and for supporting clean-up actions.

Plastics trapped in a dead mangrove forest and a beach in Vietnam. Picture taken by Assoc. Prof. Dr. PHAM Minh Hai, Vietnam Institute of Geodesy and Cartography.

Detecting litter accumulations with drones

In our latest projects we’ve been focusing on the development of new remote sensing based solutions to:

- detect litter accumulations,

- discriminate them from their surroundings (water, sand…),

- estimate the percentage of plastics in the accumulation area.

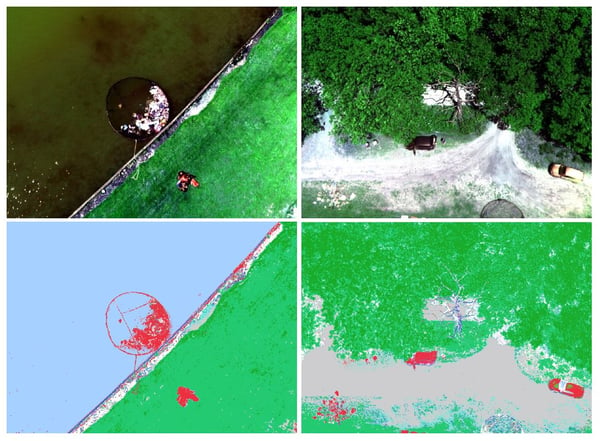

A few months ago, in November 2021, we performed an experiment on the Sibelco lake in Belgium to detect litter accumulations using a drone equipped with a multispectral camera, combined with machine learning. We placed artificial litter accumulations on both land and water surfaces. They were built from weathered plastics collected in the Port of Antwerp and the Scheldt river, and from plastics washed ashore in Vietnam. The litter included plastics, but also pieces of wood and reed.

Below you can see the true color images acquired from the drone over water (top left) and land (top right), together with their corresponding litter maps on the bottom row. The most common materials identified in these images are litter (red), wood (blue), water (light blue), soil (grey) and vegetation (green). Shaded pixels are masked and appear in white (transparent). The approach is fully automated, which makes it possible to screen large and inaccessible areas at once.
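Once a litter map like the ones described above exists, the share of each class among the unmasked pixels is simple to derive. The sketch below is only an illustration: the integer class codes, the `MASKED` value and the `class_fractions` helper are hypothetical and not taken from our processing chain. The share of pixels labelled as litter is also only a rough proxy for the percentage of plastics in an accumulation.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical integer codes for the classes named in the text.
CLASS_NAMES = {0: "water", 1: "soil", 2: "vegetation", 3: "wood", 4: "litter"}
MASKED = 255  # hypothetical code for shaded pixels that were masked out


def class_fractions(label_map: List[List[int]]) -> Dict[str, float]:
    """Share of each known class among the unmasked pixels of a classified map."""
    # Masked pixels (and any unknown codes) are left out of the totals.
    counts = Counter(
        code for row in label_map for code in row if code in CLASS_NAMES
    )
    valid = sum(counts.values())
    if valid == 0:
        return {name: 0.0 for name in CLASS_NAMES.values()}
    return {name: counts.get(code, 0) / valid for code, name in CLASS_NAMES.items()}


# Tiny made-up map: 9 valid pixels and 3 masked ones.
demo_map = [
    [0, 0, 4, 4],
    [0, 3, 4, MASKED],
    [0, 0, MASKED, MASKED],
]
fractions = class_fractions(demo_map)
print({name: round(share, 3) for name, share in fractions.items()})
```

In practice the map would be a raster array rather than nested lists, but the counting logic stays the same.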

Individual litter detection and characterization

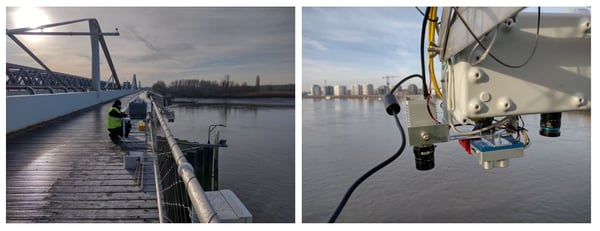

To monitor and characterize individual litter items in waterways, we set up a system with fixed cameras mounted on a bridge to provide continuous monitoring at very high spatial resolution. The first experiments started in 2021 and focused on all macro litter floating on the surface or slightly submerged. In the coming months, we will further improve the algorithms to discriminate plastics from other litter.

We performed tests with different types of cameras: a traditional RGB camera, a multispectral VNIR camera and a multispectral SWIR camera. All cameras were integrated into a housing that attaches to a bridge, with every camera pointing at the same location on the river. The SWIR camera was specifically designed for plastics detection by Xenics, a Belgian company experienced in designing and manufacturing infrared sensors. During development we took into account the ideal wavelength selection for plastic detection, which had been defined earlier in the Hyper project. The camera is also compact and lightweight, with a view to additional setups using drones.

Setup of the RGB camera, the multispectral VNIR camera and the multispectral SWIR camera over a bridge.

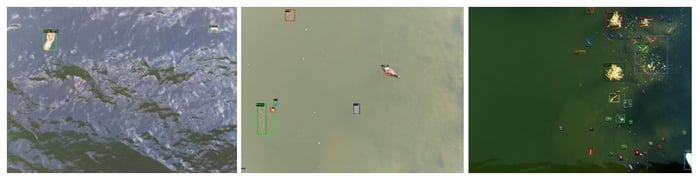

Automated processing chain based on AI

To detect litter we work with an AI model developed for outlier detection in waterways, fine-tuned on the training datasets we collected. We started with the RGB data. First results showed that litter can be detected with high accuracy (an average precision, AP50, of 88.74 percent). These promising results encouraged us to apply the model to other datasets with different types of plastics and other camera systems as well. Various experiments show that slightly submerged plastics can also be detected, but with slightly lower accuracy than floating plastics because they are harder to discriminate. Applying the existing AI model to a new dataset (other area, other camera) gave similar accuracy.

Results for dataset 1 (part of the dataset used for training) and results for dataset 2 (RGB camera) and 3 (new dataset with other camera)

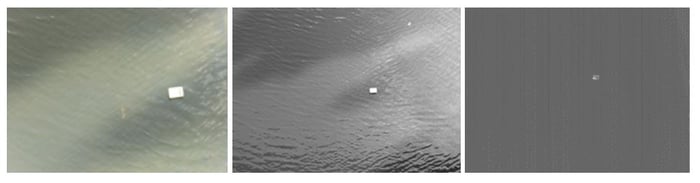
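The "average precision 50" figure quoted above is most likely the AP50 metric from object detection, where a detection counts as correct when its bounding box overlaps a ground-truth box with an intersection over union (IoU) of at least 0.5. The sketch below shows that idea for a single image and a single class. It is a simplified, non-interpolated variant written for illustration, not the evaluation code used in our experiments; the box format, the `iou` and `average_precision_50` helpers and the example boxes are all assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision_50(
    detections: List[Tuple[float, Box]], truths: List[Box]
) -> float:
    """Non-interpolated AP at IoU >= 0.5 for one image and one class."""
    if not truths:
        return 0.0
    matched = set()  # indices of ground-truth boxes already claimed
    true_positives = 0
    precision_sum = 0.0
    # Walk through detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    for rank, (_, box) in enumerate(ranked, start=1):
        best_iou, best_idx = 0.0, -1
        for idx, truth in enumerate(truths):
            if idx in matched:
                continue
            overlap = iou(box, truth)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_iou >= 0.5:
            matched.add(best_idx)
            true_positives += 1
            # Precision at the rank where recall increases.
            precision_sum += true_positives / rank
    return precision_sum / len(truths)


# Made-up example: two litter items, three detections (one false alarm).
truth_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
detected = [
    (0.9, (1, 1, 10, 10)),
    (0.8, (50, 50, 60, 60)),
    (0.6, (20, 20, 30, 32)),
]
ap50 = average_precision_50(detected, truth_boxes)
print(f"AP50 = {ap50:.3f}")
```

Benchmark toolkits usually interpolate the precision-recall curve and average over many images, so their numbers will differ from this simplified version.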

In the upcoming months we’ll further develop the data processing chain. Next steps include adapting the AI model to the multispectral VNIR and SWIR datasets. The figure below shows a plastic sample imaged by the three cameras. The resolution of the three cameras clearly differs, so the object appears smaller in the SWIR image. Water absorbs strongly in the SWIR, which results in a noisy background but potentially higher separability of the plastic object.

Same plastic object imaged with the RGB camera (left), multispectral VNIR camera (middle), and multispectral SWIR camera from Xenics (right).

Feasibility of a dedicated satellite mission?!

Given the challenges in detecting plastic, an optimized approach that combines advanced AI techniques with a dedicated mission design has the best chance of success. By identifying suitable AI techniques upfront, their requirements can be used as inputs to the mission definition. That’s why we’re also looking into the feasibility of developing a satellite mission dedicated to marine plastic detection (Marlise project). More results will be shared later on, so stay tuned.
