Recognizing plant features from the air

With professional-grade UAV platforms becoming increasingly affordable and widely available, researchers have explored various ways to incorporate their data into plant phenotyping workflows. One obvious application is recognizing plants and plant features in millimetre-resolution imagery. Traditionally, this has been done with classification algorithms such as support vector machines and random forests. These algorithms differentiate groups primarily by the spectral reflectance values of individual pixels, and occasionally by a kernel around those pixels. Such methods are often used to separate classes of interest (e.g. soil vs. plant material, or leaf vs. flower).
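To make the idea of per-pixel spectral classification concrete, here is a minimal sketch. It uses a nearest-centroid rule as a simple stand-in for the support vector machines or random forests mentioned above, and the class centroids and pixel values are made-up illustrative numbers, not measurements from any real dataset.

```python
from math import dist

# Hypothetical mean reflectance (red, green, blue) per class, as might be
# estimated from a handful of hand-labelled pixels. Values are illustrative.
CENTROIDS = {
    "soil": (0.35, 0.25, 0.20),
    "leaf": (0.10, 0.45, 0.08),
    "flower": (0.85, 0.80, 0.75),
}


def classify_pixel(pixel):
    """Return the class whose centroid is closest in spectral space."""
    return min(CENTROIDS, key=lambda name: dist(pixel, CENTROIDS[name]))


def classify_image(image):
    """Classify every pixel independently; neighbouring pixels are ignored."""
    return [[classify_pixel(pixel) for pixel in row] for row in image]


# A tiny 2x2 "image" of (red, green, blue) reflectance values.
image = [
    [(0.33, 0.27, 0.21), (0.12, 0.43, 0.10)],
    [(0.80, 0.82, 0.70), (0.09, 0.50, 0.07)],
]
labels = classify_image(image)
print(labels)
```

Note that each pixel is labelled purely from its own values. Nothing in this approach knows where one plant or flower ends and the next begins.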

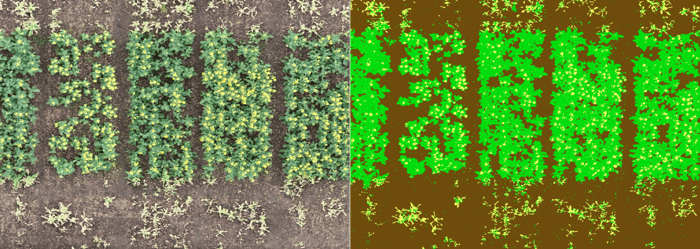

Pixel-based plant and flower classification, showing correct identification of different materials, but also that it is difficult to group these into accurate object counts.

Whilst these algorithms are generally simple and effective for many use cases, they are limited when it comes to accurately estimating the number of objects in an image. In the case of plant organ detection, it is difficult to separate overlapping objects using strictly pixel-based classifiers. For detection of relevant plants (e.g. for emergence counting), the plants of interest may have reflectance profiles similar to those of other vegetation or weeds in the area of interest, which confounds the count.
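The counting problem can be illustrated with a small sketch. A common way to turn a pixel classification into object counts is to count connected groups of same-class pixels; this is an assumption about the post-processing step, which the text does not specify. The masks below are hypothetical toy examples.

```python
from collections import deque


def count_components(mask):
    """Count 4-connected groups of 1-pixels in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Found an unvisited object pixel: flood-fill its whole group.
            count += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


# Two flowers with a gap between them.
separate = [
    [1, 1, 0, 0, 1, 1],
    [1, 1, 0, 0, 1, 1],
]
# The same two flowers, now overlapping in the middle of the image.
overlapping = [
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 1, 0],
]
print(count_components(separate), count_components(overlapping))
```

Both masks contain two flowers, but the overlapping pair merges into a single connected region and is counted once.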

Convolutional neural networks for object detection

By using deep convolutional neural networks (CNNs), the issues with pixel classifiers can largely be overcome. This is because CNNs also learn to identify the shapes and internal geometries that may, for example, differentiate between two spectrally similar plants. In the case of plant emergence, this can be crucial for separating the plants of interest from weeds and other vegetation on the site, allowing for more precise counting. For plant organ counting, two overlapping objects can be detected and counted separately, whereas pixel classification finds no border between them.

Two issues have held back the applicability of object detection for use cases involving high-resolution aerial imagery. Until recently, CNN architectures typically gave unsatisfactory results on images with small objects and on images with densely packed objects. Both characteristics define many agricultural detection tasks. Now, and likely over the coming years, new CNN architectures are advancing to overcome these issues so that they can be used in these more difficult and specific detection tasks. By researching which model architectures best suit our use cases, implementing these models, and optimizing their parameters, we have been able to reach accuracy levels that previously seemed out of reach.

Labelling of wheat ears from drone-based imagery. Comparison of labelling results between different operators is used to improve model accuracy.
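One way such an operator comparison could be quantified is by matching bounding boxes from two annotators using intersection over union (IoU). The sketch below is an assumption about how this might be done, not a description of the MAPEO workflow; the box coordinates, the greedy matching strategy and the 0.5 threshold are all illustrative choices.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union else 0.0


def match_boxes(boxes_a, boxes_b, threshold=0.5):
    """Greedily pair each box in A with its best remaining box in B.

    Returns (matched index pairs, unmatched A indices, unmatched B indices).
    """
    unmatched_b = list(range(len(boxes_b)))
    matches, only_a = [], []
    for i, box in enumerate(boxes_a):
        best = max(unmatched_b, key=lambda j: iou(box, boxes_b[j]), default=None)
        if best is not None and iou(box, boxes_b[best]) >= threshold:
            matches.append((i, best))
            unmatched_b.remove(best)
        else:
            only_a.append(i)
    return matches, only_a, unmatched_b


# Hypothetical wheat-ear boxes drawn by two operators on the same image.
operator_1 = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
operator_2 = [(1, 1, 11, 11), (21, 19, 31, 29), (80, 80, 90, 90)]

matches, only_1, only_2 = match_boxes(operator_1, operator_2)
print(matches, only_1, only_2)
```

Boxes that only one operator drew are the interesting ones: they point to ambiguous objects that may need a labelling guideline or a second review before being used for training.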

Integrating an AI workflow into the MAPEO platform

Through the MAPEO platform, a drone-based image processing solution, a large volume of data has been collected for a diverse array of crops, covering numerous applications at sites across the globe. We recently expanded the functionality of our platform and integrated an object detection workflow. By setting up a dynamic database of training data, metadata and models linked directly to the UAV image products, we can now work with pre-defined models or swiftly train and apply new models.

The continuously growing input datasets in MAPEO are advantageous for implementing both general models and site-specific models. For general models, this is because images of most crops can vary widely in appearance due to several factors, including crop variety, soil, growing climate, treatments and the presence of other vegetation. If you wish to create a general model for detecting a plant trait, you ideally need to account for these differences as much as possible in the training set, which can be accomplished by collecting data across a wide range of genetic and environmental conditions.

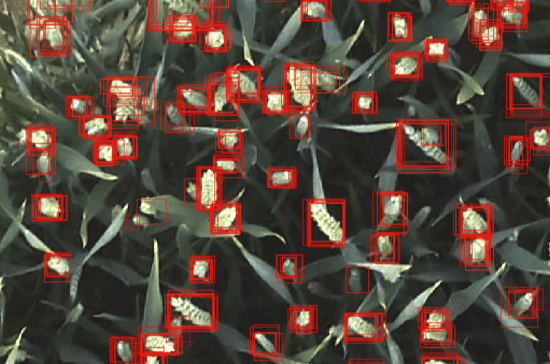

Detection of onion flowers, demonstrating CNN capacity to exclude non-target vegetation and to separate overlapping objects effectively.

Continuously improving the accuracy of the AI models

These general models can then be used to create improved detection models for individual sites or other more specific use-cases. They often improve on a model custom-built using only data from a target site, or those similar to it. Typically, when we apply a pre-built general model to a new site, initial accuracy is already high, with only small amounts of additional data, annotation and training needed to capture site-specific edge cases and to validate accuracy. This has some key advantages over training ad-hoc on a per-site basis:

- Models can be trained much faster, both in terms of labelling time (because fewer labels are needed) and processing time (because most model weights are already known).

- More data is used to generate the model weights, so a greater variety of edge cases and other object examples, which may not feature in a particular training set, can be accounted for. This leads to higher test set accuracy and greater confidence in results.

- Jobs can be more easily incorporated into an automated pipeline, allowing for accurate object detection models to be generated with a lower need for user input.
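The processing-time advantage of starting from known weights can be shown with a deliberately simplified toy. The sketch below fits a two-parameter linear model by gradient descent instead of a CNN, and all numbers (the "site" data, the "general" weights, the learning rate and tolerance) are invented for illustration.

```python
def fine_tune(xs, ys, w, b, lr=0.05, tol=1e-4, max_steps=10_000):
    """Gradient descent on mean squared error; returns (w, b, steps taken)."""
    n = len(xs)
    for step in range(max_steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        if sum(r * r for r in residuals) / n < tol:
            return w, b, step
        # Gradients of the mean squared error with respect to w and b.
        w -= lr * 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        b -= lr * 2 * sum(residuals) / n
    return w, b, max_steps


# Hypothetical site-specific data that differs slightly from the general trend.
site_x = [0.0, 0.5, 1.0, 1.5, 2.0]
site_y = [2.1 * x + 0.9 for x in site_x]

# Warm start: begin from the weights of a (hypothetical) general model.
_, _, warm_steps = fine_tune(site_x, site_y, w=2.0, b=1.0)
# Cold start: train on the site data alone, from zero weights.
_, _, cold_steps = fine_tune(site_x, site_y, w=0.0, b=0.0)

print(warm_steps, cold_steps)
```

Because the general weights already sit close to the site-specific optimum, the warm start reaches the same tolerance in fewer update steps than the cold start. Real CNN fine-tuning is far more involved, but the intuition carries over.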

As we continue to work on our object detection workflows, we will keep looking for ways to improve accuracy even further, both by integrating the latest developments in computer vision and by gathering and incorporating more data relevant to a range of plant phenotyping use cases.

Check also our latest MAPEO stories about ‘Flower count in fruit orchards’ or how Aphea.Bio is using drones to evaluate biostimulants, and stay tuned as more cases and features are coming up later this year.
