Working with high-quality, high-resolution imagery

The introduction and spread of drone imaging solutions has allowed for easier and higher quality mapping in many domains, including plant phenotyping. Compared to traditional manual measurements, researchers are able to quickly ascertain the height and coverage differences of crop varieties, view spectral indices to understand plant health, and compare the effect of different diseases or treatments. More recently, ever-increasing computational power and advances in machine learning have made it possible to build object detection models that directly detect plants and their organs. Counting these plant characteristics directly is valuable because many of them are closely linked to overall yield, so accurate estimates produced with much lower effort benefit both researchers and plant breeders.

The biggest hindrance to implementing such models, and to implementing them at scale, is the lack of high-quality, high-resolution imagery. Generally, these models perform best when the ground resolution is in the range of a few millimetres per pixel, with finer resolutions being better. For most drone cameras, achieving this resolution, particularly over larger fields, can be difficult for several reasons.

- The drone platform will be required to fly very low to take the images, which can cause the rotors of the drone to blow down on the crops, affecting the resulting image and possibly damaging the trial.

- Each image will cover a smaller spatial extent of the field, which can affect the stitching together of individual images due to a lack of matching features.

- Lower-end cameras may produce less sharp images, diminishing the contrast between object and background and making predictions less certain.

Phase One camera

At Phase One, we specialize in high-end camera equipment, including for use in several aerial imaging applications. One such camera is the iXM-100. This camera captures images at 100 megapixels and includes a backside-illuminated sensor for high dynamic range, along with several other features. Compared to other drone cameras, this camera allows users to capture higher resolutions while also covering a wider area and being confident in the resulting image quality. This makes it an ideal camera to use for object detection, as it is possible to capture the necessary high resolutions of only a few millimetres per pixel without the risks of flying the drone too low.

For reference, a commonly used professional-grade drone camera of 20.8 megapixels would, to achieve a resolution of 1 mm per pixel, be required to fly at a height of 5 metres, and each image would cover a 5.3 by 4.0 metre area. In contrast, the iXM-100 covers more than double the extent in each dimension (11.7 by 8.8 metres, nearly five times the area) at the same resolution, whilst flying at a height of 17 metres. This height and area coverage remove the risk of flying too low and retain enough feature variability to match images and create an orthomosaic overview of the whole field.
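The footprint figures above follow from multiplying each sensor dimension in pixels by the ground resolution. The sketch below is a minimal illustration of that arithmetic. The pixel dimensions (11664 by 8750 and 5280 by 3956) are assumptions that are not stated in this article; they were chosen because they are consistent with the quoted megapixel counts and footprints. `footprint_m` is a hypothetical helper name. Flight height is not computed here, since it also depends on lens focal length and pixel pitch, which the article does not give.

```python
from typing import Tuple


def footprint_m(width_px: int, height_px: int, gsd_m: float) -> Tuple[float, float]:
    """Return the ground footprint (width, height) in metres of one nadir image.

    gsd_m is the ground sampling distance: metres of ground covered per pixel.
    """
    if gsd_m <= 0:
        raise ValueError("gsd_m must be positive")
    return width_px * gsd_m, height_px * gsd_m


GSD_M = 0.001  # 1 mm per pixel, as in the comparison above

# Assumed pixel dimensions (not from the article), see the note above.
large_w, large_h = footprint_m(11664, 8750, GSD_M)  # ~100 megapixel sensor
small_w, small_h = footprint_m(5280, 3956, GSD_M)   # ~20.8 megapixel sensor

area_ratio = (large_w * large_h) / (small_w * small_h)

print(f"100 MP footprint:  {large_w:.1f} m x {large_h:.1f} m")
print(f"20.8 MP footprint: {small_w:.1f} m x {small_h:.1f} m")
print(f"Area ratio: {area_ratio:.1f}x")
```

Because the footprint scales linearly with ground resolution in each dimension, halving the resolution requirement (for example from 1 mm to 2 mm per pixel) would quadruple the area covered by a single image.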

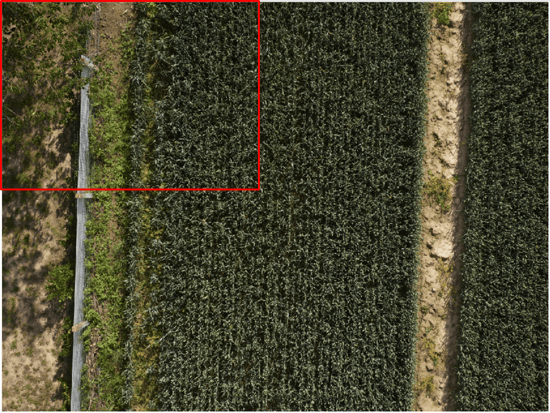

Single image captured with the iXM-100. The red bounding box overlay shows the expected coverage of an image taken with a 20.8 megapixel camera at the same resolution. In some cases, such an image might not contain any features outside of the vegetation that can be used to match points between images.

Training and running object detection models

Sam Oswald, remote sensing expert in agricultural monitoring, explains more about the drone-based image processing solution ‘MAPEO’: “Collecting high-quality, high-resolution images forms the basis for object detection in crop fields. Following this, data must be incorporated into a machine learning model, either for training (where human annotators tag objects within individual images), or for inference (where objects are predicted using a trained model). Doing so can be costly in terms of both time and effort, so end users may prefer to utilize a platform set up to handle their specific use cases. That is why we develop MAPEO, an end-to-end image processing platform which includes an object detection workflow specifically for phenotyping use cases, including plant emergence and organ counting.”

This object detection workflow is ideal for phenotyping use cases for several reasons:

- It uses an end-to-end image processing workflow to create consistent products, such as orthomosaics, which form the basis for object detection.

- When new training data is ingested, it is aggregated with the existing training data for that crop. Over time, this allows the model to generalize better, capturing multiple crop varieties, edge cases, and background environments in the model weights. For new jobs, this also means that less data needs to be set aside for training, so inference can start sooner.

- Model architecture and augmentation are configured to perform best in the phenotyping use case, where objects are generally small and often overlap each other.

- Training data, models, and outputs are stored in a standardised way, allowing for easy transfer in the future when re-training or improving models, or when exporting outputs into other workflows.

- Post-processing removes overlapping boxes that arise when images are stitched into an orthomosaic.
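The article does not say which method MAPEO uses to remove overlapping boxes. As an illustration only, the sketch below shows greedy non-maximum suppression, a common technique for this kind of clean-up: boxes are visited from highest to lowest confidence, and a box is dropped if it overlaps an already kept box by more than a chosen intersection-over-union (IoU) threshold. The box layout, function names, and the 0.5 threshold are all assumptions made for the example.

```python
from typing import List, Tuple

# Hypothetical box layout: (x_min, y_min, x_max, y_max, score)
Box = Tuple[float, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], iou_threshold: float = 0.5) -> List[Box]:
    """Keep the highest-scoring box from each group of heavily overlapping boxes."""
    kept: List[Box] = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        # Keep the box only if it does not overlap too much with any kept box.
        if all(iou(box, k) <= iou_threshold for k in kept):
            kept.append(box)
    return kept


detections = [
    (0.0, 0.0, 10.0, 10.0, 0.9),    # wheat ear seen in one image
    (1.0, 1.0, 11.0, 11.0, 0.8),    # same ear seen again in an overlapping image
    (50.0, 50.0, 60.0, 60.0, 0.7),  # a different ear
]
unique = non_max_suppression(detections)
print(len(unique), "boxes kept out of", len(detections))
```

In a phenotyping setting, where objects are small and often genuinely overlap, the threshold would need tuning so that neighbouring organs are not merged into one detection.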

Data science

Following training and inference, detections are presented for the target site via a personal dashboard on the MAPEO platform, along with other products of interest. This allows easy visualization and comparison of object counts with other plant health indicators. The platform also allows for easy export of data, either in the form of layers or as statistics over a target plot. This can be useful for extracting organ density, for example the number of wheat ears per square metre in a given trial plot, and for comparing trials with one another.
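Organ density of this kind is the number of detections inside a plot divided by the plot area. The sketch below is a minimal illustration and is not MAPEO's export code: it assumes detection centres and plot bounds are given in metres in the same projected coordinate system, that plots are axis-aligned rectangles, and the function name and sample coordinates are hypothetical.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float]               # (x, y) detection centre in metres
Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in metres


def density_per_m2(centres: Iterable[Point], plot: Rect) -> float:
    """Count detection centres inside a rectangular plot and divide by its area."""
    x_min, y_min, x_max, y_max = plot
    area = (x_max - x_min) * (y_max - y_min)
    if area <= 0:
        raise ValueError("plot must have a positive area")
    count = sum(
        1 for x, y in centres
        if x_min <= x < x_max and y_min <= y < y_max
    )
    return count / area


# Hypothetical detection centres and a 2 m x 1 m trial plot.
ear_centres = [(0.5, 0.5), (1.5, 0.5), (3.0, 3.0)]
plot_a = (0.0, 0.0, 2.0, 1.0)
print(f"{density_per_m2(ear_centres, plot_a):.2f} ears per square metre")
```

Real trial plots are often rotated or irregular polygons, in which case a point-in-polygon test from a geospatial library would replace the rectangle check.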

Visualization of wheat ear objects detected on MAPEO, going from an overview of the detections over the entire site to a close-up of individual wheat ear detections.

Finally, the standardized storage of detection outputs in the platform allows this process to be scaled up easily. Users can add new jobs to the platform for additional object detection and programmatically integrate the results, together with external data, into existing workflows. This goes a long way towards overcoming the key difficulties of implementing object detection in drone-based image mapping workflows, and allows the powerful insights this technology generates to be used to improve research outcomes.

Phase One
